The Politics of Facial Recognition Systems: The Biased History of Algorithms (2020)

The aim of this argument is to unfold and decode the inherent racial biases and structural racism in facial recognition systems (FRS) by re-reading, almost two decades after its publication, the essay Picturing Algorithmic Surveillance, co-written by Lucas D. Introna and David Wood [1].

The history of technoscience is indeed shaped around colonial visions. As Donna Haraway observes in “Modest_Witness”: “anti-Semitism and misogyny intensified in the Renaissance and Scientific Revolution of early modern Europe, that racism and colonialism flourished in the traveling habits of the cosmopolitan Enlightenment, and that the intensified misery of billions of men and women seems organically rooted in the freedoms of transnational capitalism and technoscience.” [2]

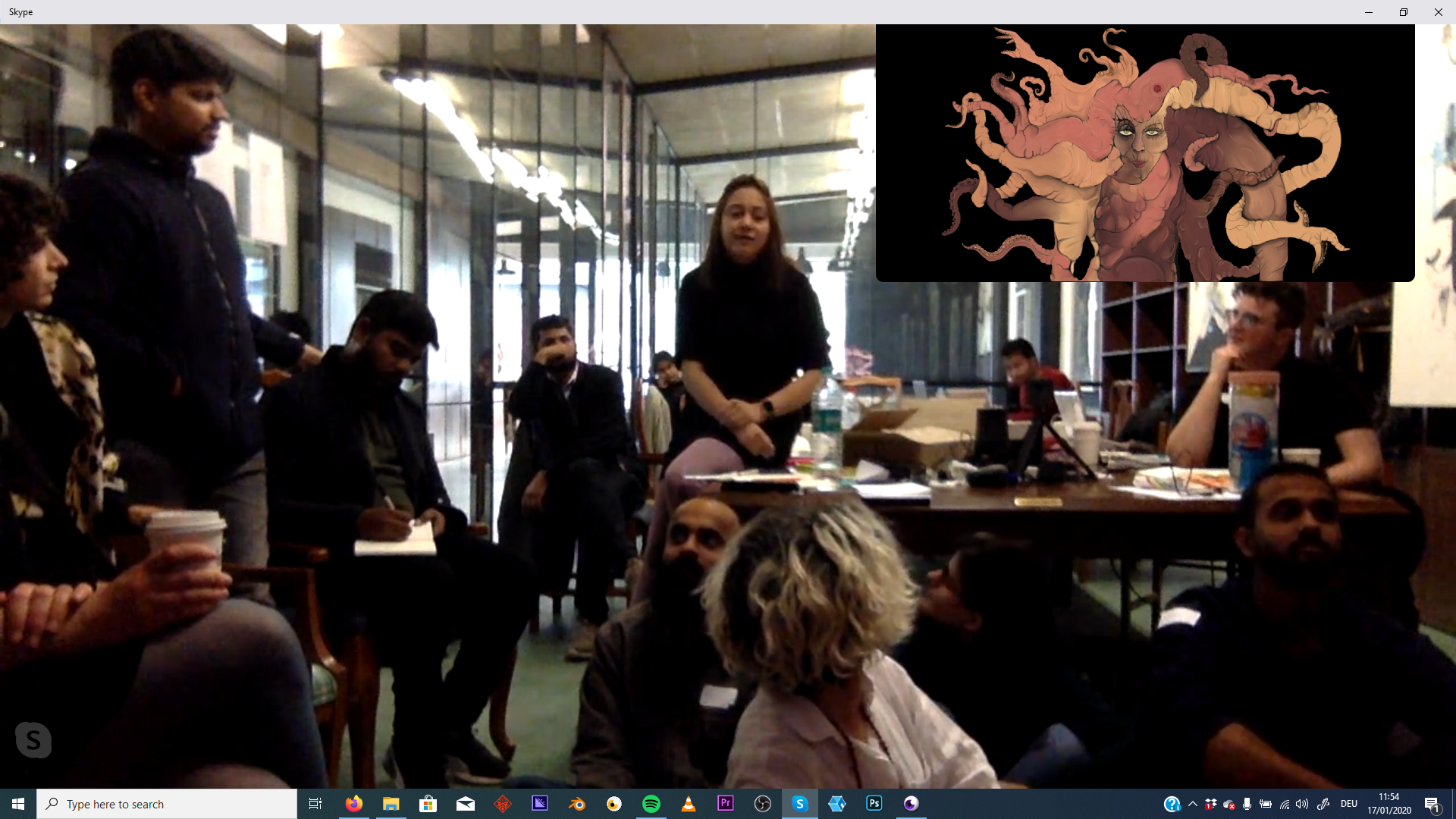

This visual essay was presented at the workshop Code, Layers, Infrastructures, convened by Loren Britton, Isabel Paehr, Jörn Röder and Kamran Behrouz, alongside a live discussion with the mocap avatar and the workshop participants about the politics of FRS in India and on a global scale.

@ Common Room, New Delhi (organized by Haus der Kulturen der Welt, Berlin)

©️Photo by Annette Jacob

Zürich – New Delhi Skype meeting at Common Room

[1] Lucas D. Introna and David Wood, Picturing Algorithmic Surveillance: The Politics of Facial Recognition Systems

[2] Donna J. Haraway, with paintings by Lynn M. Randolph, Modest_Witness@Second_Millennium.FemaleMan©_Meets_OncoMouse™: Feminism and Technoscience, New York: Routledge, 1997